Kubernetes: Building the New Cluster

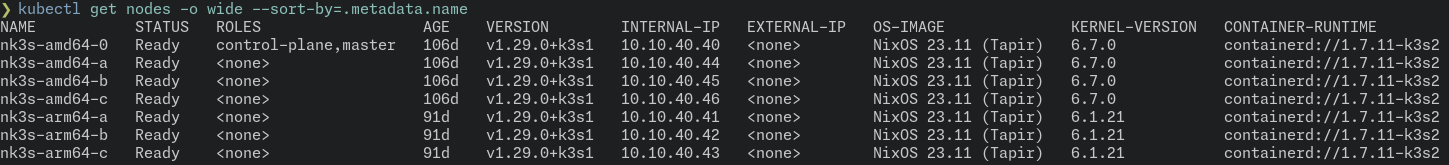

I am just a little slow at writing blog posts, but you know, that's alright. As I am sure you can tell from the image above, the cluster has been running in its new configuration for a few months now, and apart from a few issues unrelated to the hardware, it has been a good transition. My server rack is a good bit quieter now that I have been able to shut down the old HP server, and it is probably drawing a few fewer watts as well. While I did lose my cluster age counter, which was over 950 days, the cluster is still the exact same one I started with, as each component has been replaced Ship of Theseus style along the way.

The new primary worker nodes are all MINISFORUM UN100C mini-PCs (since superseded by a similar model), each sporting an Intel N100 processor, 16GB of RAM, a 512GB M.2 SATA SSD, and an HPE SM863A 960GB SSD. I got these on sale for about $160 each on Amazon, though they are no longer available there. They are not super powerful and have a bit less RAM than my virtual nodes, but thanks to a decade of process node improvements, together they are more powerful than my beast of a server for the same node count. The 512GB M.2 SSD serves as the boot drive, while the HPE SSD is dedicated to the Ceph cluster via Rook/Ceph.

With this project I decided to dive deeper into using Nix/NixOS on all my devices, so these nodes got a fresh install of NixOS, as documented in my nixos-configuration repository. I started with the master node, which I decided to keep virtual given the excess compute available on my NAS, and did a backup/restore of k3s following the official documentation on what to move over. Aside from losing my cluster age (it was technically a new master), the great part was that all my workloads kept running without interruption throughout the master move.
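For anyone repeating the master move, here is a minimal sketch of the file-level part, assuming the default SQLite datastore and the default k3s paths (`/var/lib/rancher/k3s/server/db/` plus the `token` file, which is what the k3s backup/restore docs name for SQLite). The function names are hypothetical and this is not the exact procedure used here; it's safest to stop k3s on both machines while copying.

```python
import tarfile
from pathlib import Path

# Assumed default k3s server data directory: the SQLite datastore lives in
# db/ and the cluster token in token.
K3S_SERVER_DIR = Path("/var/lib/rancher/k3s/server")
BACKUP_ITEMS = ("db", "token")


def backup_k3s_server(archive_path: Path, server_dir: Path = K3S_SERVER_DIR) -> list:
    """Archive the datastore directory and token from a stopped k3s server."""
    with tarfile.open(archive_path, "w:gz") as tar:
        for item in BACKUP_ITEMS:
            src = server_dir / item
            if not src.exists():
                raise FileNotFoundError(f"missing k3s path: {src}")
            # Store paths relative to server_dir so they unpack cleanly
            # into the new master's data directory.
            tar.add(src, arcname=item)
    return list(BACKUP_ITEMS)


def restore_k3s_server(archive_path: Path, server_dir: Path = K3S_SERVER_DIR) -> None:
    """Unpack a backup into the new server's data directory (k3s stopped)."""
    server_dir.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Python 3.12+ (and recent backports): refuse unsafe members.
            tar.extractall(server_dir, filter="data")
        else:
            tar.extractall(server_dir)
```

If the cluster runs embedded etcd rather than SQLite, the k3s docs point to `k3s etcd-snapshot save` instead of copying files.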

With the master node set up in its new home, I moved on to the worker nodes. My plan involved reducing my workloads to the essentials, to a level where I could take two of the virtual nodes offline without interrupting any services. This was a little insurance; although I only planned to have one node down at a time, I wanted to be sure I could handle an “oopsie”. These virtual nodes were my main Ceph nodes, so the plan was to follow the Ceph OSD Management documentation: bring down one virtual node, replace it with one of the UN100Cs, and let the Ceph cluster rebuild and return to a healthy state before moving on to the next node. The process turned out to be mostly a waiting game with Ceph to make sure I didn’t get into a bad state, and after an afternoon of tinkering I was up and running with all-physical hardware for my Ceph cluster and primary worker nodes.
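The waiting game between node swaps can be scripted. Below is a rough sketch that polls `ceph status --format json` and treats the cluster as settled once health is `HEALTH_OK` and every placement group is active and clean. It assumes the Rook toolbox is deployed as `deploy/rook-ceph-tools` in the `rook-ceph` namespace (the names in Rook's example manifests) and assumes the `health.status` / `pgmap.pgs_by_state` JSON layout; check both against your Ceph release. It only covers the waiting, not the OSD removal steps themselves.

```python
import json
import subprocess
import time


def ceph_is_settled(status: dict) -> bool:
    """True when health is HEALTH_OK and every PG is active+clean."""
    if status.get("health", {}).get("status") != "HEALTH_OK":
        return False
    pgmap = status.get("pgmap", {})
    total = pgmap.get("num_pgs", 0)
    # States like "active+clean+scrubbing" still count as clean.
    clean = sum(
        s["count"]
        for s in pgmap.get("pgs_by_state", [])
        if {"active", "clean"} <= set(s["state_name"].split("+"))
    )
    return total > 0 and clean == total


def read_ceph_status(namespace: str = "rook-ceph",
                     toolbox: str = "deploy/rook-ceph-tools") -> dict:
    """Run `ceph status` inside the (assumed) Rook toolbox and parse the JSON."""
    out = subprocess.run(
        ["kubectl", "-n", namespace, "exec", toolbox, "--",
         "ceph", "status", "--format", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


def wait_for_ceph(poll_seconds: float = 60, timeout_seconds: float = 6 * 3600,
                  reader=read_ceph_status) -> bool:
    """Poll until Ceph is settled; return False if the timeout expires first."""
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        if ceph_is_settled(reader()):
            return True
        time.sleep(poll_seconds)
    return False
```

Requiring `HEALTH_OK` is stricter than strictly necessary (flags such as `noout` raise a warning), but it errs on the side of not pulling the next node too early.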

Next on the list was migrating the Raspberry Pi nodes from their Ubuntu install to NixOS. I wanted it to be a UEFI install, just like all my other hardware. While I did get NixOS running on them via the shared-image steps documented in my configuration repo, I found I was going to have to maintain my own hardware tweaks and kernel modules to get the PoE HAT working 100% (primarily, the fan does not work without a specific kernel module). The community-maintained nixos-hardware modules really only support the more traditional extlinux boot method for single-board computers. As I didn’t want to fight the nodes constantly, I ended up following a more traditional install approach using the nixos-hardware modules for Raspberry Pi.

With the Raspberry Pi nodes moved over, my ship was rebuilt and all the same workloads were still running uninterrupted. There were a few more tweaks to make, especially since some components, like system upgrade controller and kured, did not work out of the box on NixOS. I ended up removing system upgrade controller, as I handle upgrades out of band from the cluster itself. As for kured, I brainstormed with a peer who was also migrating a node to NixOS, and we devised a systemd service that recognizes when a reboot is required and replicates the file indicator that kured checks for.
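The idea behind that service can be sketched in a few lines. This is a hypothetical illustration, not the service we actually wrote: it assumes NixOS's `/run/booted-system` and `/run/current-system` symlinks, treats a differing `kernel`, `initrd`, or `kernel-modules` target as "reboot required", and assumes kured is watching its default sentinel path, `/var/run/reboot-required`. Something like a systemd timer would run `sync_sentinel()` periodically.

```python
from pathlib import Path

# NixOS exposes the booted and currently activated generations as symlinks;
# comparing their kernel-related entries is one common heuristic.
BOOTED = Path("/run/booted-system")
CURRENT = Path("/run/current-system")
# Assumed kured default sentinel path; adjust if --reboot-sentinel is changed.
SENTINEL = Path("/var/run/reboot-required")
PARTS = ("kernel", "initrd", "kernel-modules")


def reboot_required(booted: Path = BOOTED, current: Path = CURRENT) -> bool:
    """True if the activated generation's kernel bits differ from the booted ones."""
    for part in PARTS:
        b, c = booted / part, current / part
        b_exists, c_exists = b.exists(), c.exists()
        if not b_exists and not c_exists:
            continue
        # Resolve symlinks to their store paths before comparing.
        if b_exists != c_exists or b.resolve() != c.resolve():
            return True
    return False


def sync_sentinel(sentinel: Path = SENTINEL, booted: Path = BOOTED,
                  current: Path = CURRENT) -> bool:
    """Create or remove the sentinel file so kured sees the current state."""
    needed = reboot_required(booted, current)
    if needed:
        sentinel.touch()
    else:
        sentinel.unlink(missing_ok=True)
    return needed
```

Because `/var/run` lives on a tmpfs, the sentinel disappears on its own after the reboot kured performs.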

If you are interested in running your own cluster at home, I recommend joining the Home Operations Discord community, where I occasionally lurk and where many more smart people are active and helpful. There are a plethora of setups to choose from and plenty of resources there to help along the way.